Hi! 👋

I am a third-year computer science PhD student in the Vislang Lab at Rice University, advised by Prof. Vicente Ordonez.

My research focuses on developing general intelligence by integrating visual and linguistic information to enhance world understanding. I am particularly interested in AI Agents, multimodal reasoning, and the application of neuro-symbolic methods.

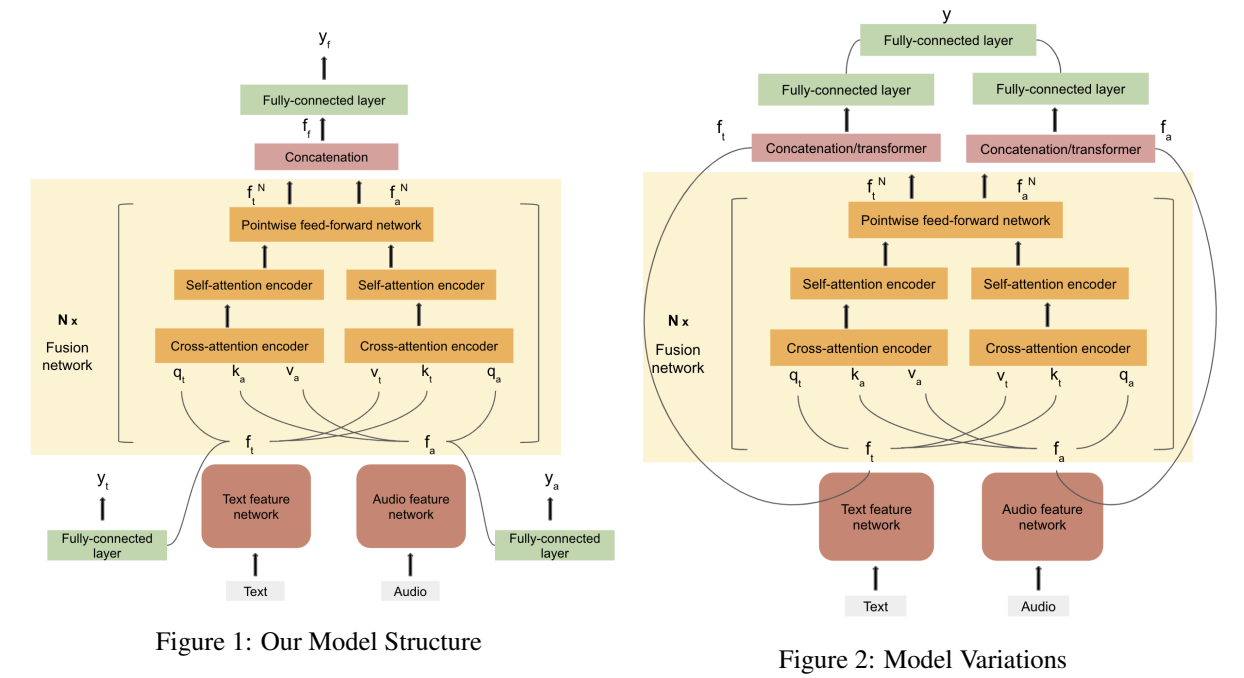

I received my M.S. in Computer Science from Columbia University, where I worked on multimodal emotion detection in the Speech Lab, advised by Prof. Julia Hirschberg. I also worked with Prof. Shih-Fu Chang at Columbia University.

Before that, I graduated from Ewha Womans University in 2021 with a B.S. in Computer Science and Engineering and from the Scranton Honors Program. There, I was advised by Prof. Dongbo Min and Prof. Hyun-Seok Park.

🔥 News

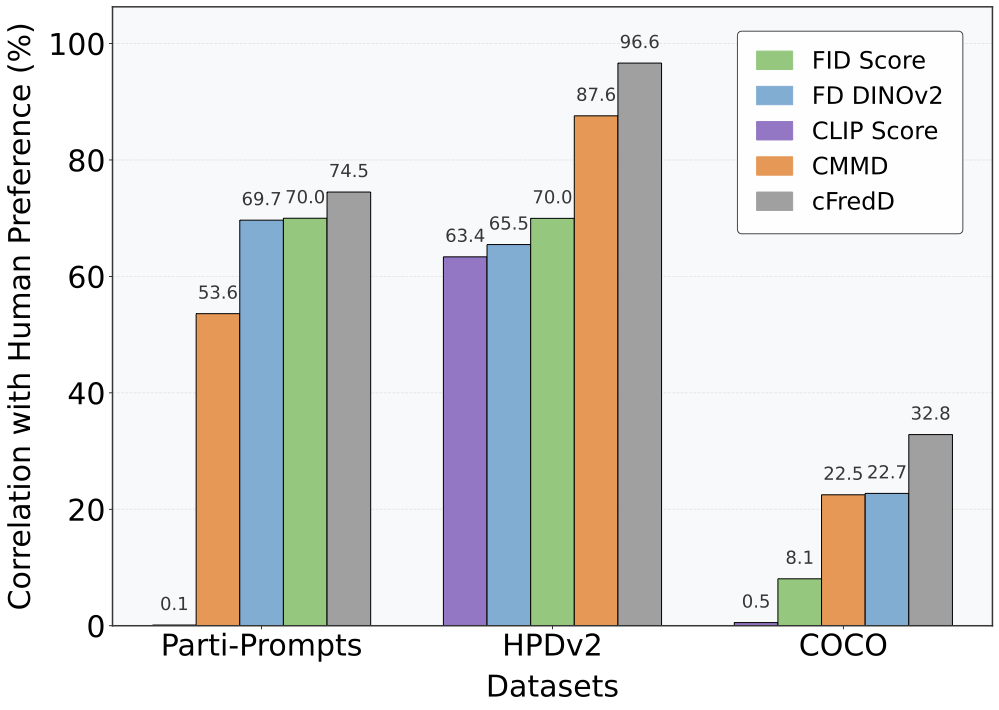

- [09/2025] Our paper “Evaluating Text-to-Image and Text-to-Video Synthesis with a Conditional Frechet Distance” is accepted to WACV 2026. 🎉

- [05/2025] I joined NEC Labs as a research intern this summer.

- [09/2024] Our paper “PropTest: Automatic Property Testing for Improved Visual Programming” is accepted to EMNLP 2024 Findings. 🎉

- [03/2024] Our paper “Multi-Modality Multi-Loss Fusion Network” is accepted to NAACL 2024 (Oral). 🎉

- [12/2023] Our paper “Beyond Grounding: Extracting Fine-Grained Event Hierarchies Across Modalities” is accepted to AAAI 2024. 🎉

📝 Publications

Publications

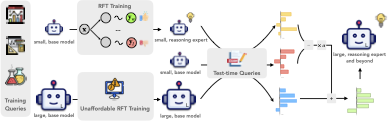

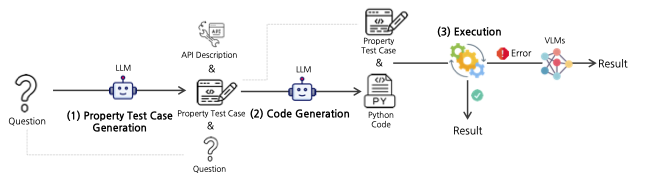

PropTest: Automatic Property Testing for Improved Visual Programming

Jaywon Koo, Ziyan Yang, Paola Cascante-Bonilla, Baishakhi Ray, Vicente Ordonez

Findings of the Conference on Empirical Methods in Natural Language Processing (Findings of EMNLP 2024).

Paper | Project Page

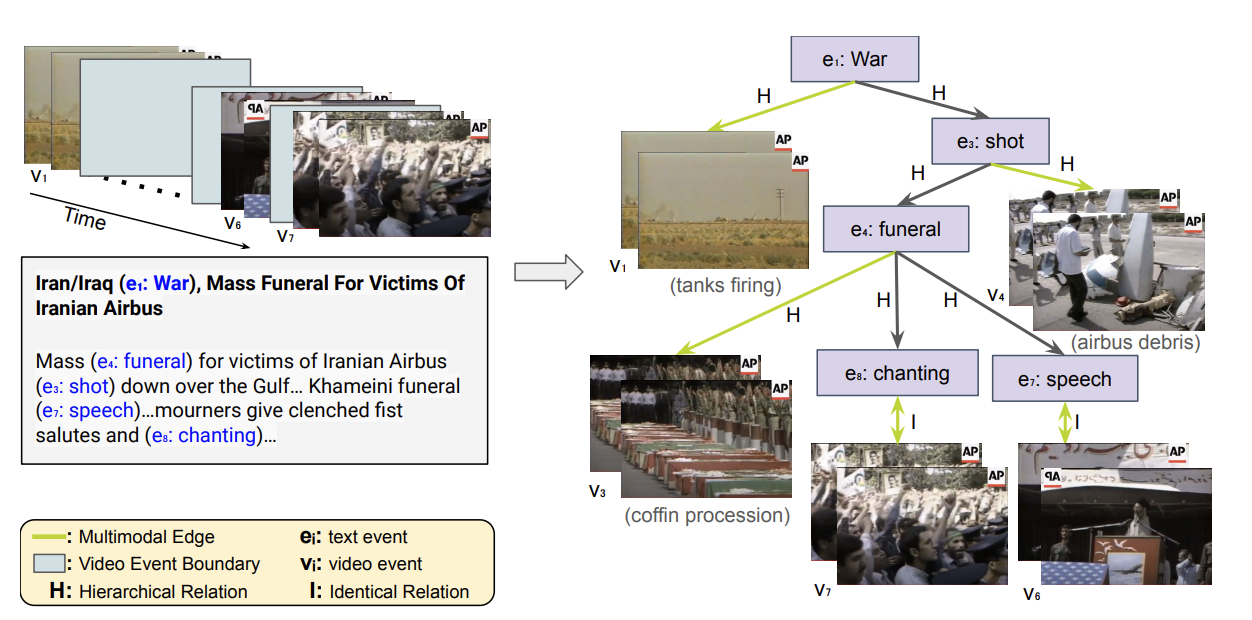

Beyond Grounding: Extracting Fine-Grained Event Hierarchies Across Modalities

Hammad Ayyubi, Christopher Thomas, Lovish Chum, Rahul Lokesh, Long Chen, Yulei Niu, Xudong Lin, Xuande Feng, Jaywon Koo, Sounak Ray, Shih-Fu Chang

Proceedings of the AAAI Conference on Artificial Intelligence (AAAI-24).

Paper